In my previous post, I briefly discussed the rationale behind automated blur detection in digital imagery and gave an overview of an algorithm that can be used to detect blurred images. Here I will show some implementation details along with some C++ code snippets.

Experience tells us that blurred images tend to contain less detail than their sharper counterparts, and that the areas where intensity transitions occur (e.g. the border of an object) are better defined in clear images. Mathematically speaking, the slope of the intensity transition is statistically steeper in clear images than in blurred ones. Since whether an image is blurred or not does not depend on color, it is sufficient to perform detection in the luminance space (i.e. on grayscale images):

\[I=0.299R+0.587G+0.114B\]
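As a minimal sketch of this conversion, the luminance is a weighted sum of the three channels with the coefficients 0.299, 0.587 and 0.114. The helper name `rgbToLuminance` and the interleaved 8-bit RGB layout are assumptions made for illustration; they are not part of IPP or of the code used in this post.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: converts interleaved 8-bit RGB pixels (R, G, B, R, G, B, ...)
// to floating-point luminance values, one per pixel.
std::vector<float> rgbToLuminance(const std::vector<std::uint8_t>& rgb) {
    assert(rgb.size() % 3 == 0);  // expect whole RGB triplets
    std::vector<float> gray(rgb.size() / 3);
    for (std::size_t i = 0; i < gray.size(); ++i) {
        const float r = rgb[3 * i];
        const float g = rgb[3 * i + 1];
        const float b = rgb[3 * i + 2];
        // Weighted sum of the channels (note the additions, not products).
        gray[i] = 0.299f * r + 0.587f * g + 0.114f * b;
    }
    return gray;
}
```

Because the three weights sum to one, a pure white pixel maps to a luminance of about 255 and a pure black pixel to 0.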

Canny Edge Detection

Thus the very first step in deciding whether an image, or an area within an image, is blurred is to use an edge detection algorithm to obtain the collection of edges in the image.

Canny edge detection is a good candidate since it is designed to be optimal in terms of good detection and good localization. It also uses hysteresis thresholding to reduce streaking, and thus achieves relatively continuous edge boundaries compared to other edge detection methods.

In one of my previous posts, I discussed Canny edge detection with automatic thresholding using Intel’s Integrated Performance Primitives (IPP). I used the same algorithm here for the image pre-processing step.
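To illustrate why hysteresis gives more continuous edges, here is a generic sketch of hysteresis thresholding on a gradient-magnitude image. It is not IPP's implementation nor the code from the earlier post; the function name `hysteresisThreshold` and the row-major `std::vector<float>` layout are assumptions for this example. Pixels at or above the high threshold seed the edges, and weaker pixels are kept only when they are 8-connected to an already accepted edge pixel.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: returns a mask (1 = edge, 0 = not edge) for a
// row-major gradient-magnitude image of size width x height.
std::vector<unsigned char> hysteresisThreshold(const std::vector<float>& mag,
                                               int width, int height,
                                               float low, float high) {
    assert(width > 0 && height > 0 && low <= high);
    assert(mag.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));

    std::vector<unsigned char> edges(mag.size(), 0);
    std::vector<int> stack;  // accepted pixels whose neighbours still need a visit

    // Seed with strong pixels.
    for (int i = 0; i < width * height; ++i) {
        if (mag[i] >= high) {
            edges[i] = 1;
            stack.push_back(i);
        }
    }

    // Grow each edge into 8-connected weak pixels.
    while (!stack.empty()) {
        const int idx = stack.back();
        stack.pop_back();
        const int cx = idx % width;
        const int cy = idx / width;
        for (int dy = -1; dy <= 1; ++dy) {
            for (int dx = -1; dx <= 1; ++dx) {
                const int nx = cx + dx;
                const int ny = cy + dy;
                if (nx < 0 || nx >= width || ny < 0 || ny >= height) continue;
                const int n = ny * width + nx;
                if (!edges[n] && mag[n] >= low) {
                    edges[n] = 1;
                    stack.push_back(n);
                }
            }
        }
    }
    return edges;
}
```

A weak response that touches a strong edge is kept, which closes small gaps, while an isolated weak response of the same magnitude is discarded as noise.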

Hough Transform

In order to analyze the gradients along detected edges, it is necessary to first parameterize them. The Hough transform comes in handy for this task.

While the Hough transform is capable of identifying arbitrary shapes, simple line detection is more robust for the purpose of detecting image blur. Besides, IPP provides an implementation of Hough line detection out of the box.
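For readers unfamiliar with the technique, the following is a rough sketch of Hough voting for straight lines. It is not how IPP implements it; the names `Pixel`, `PolarLine` and `strongestHoughLine` are hypothetical. Every edge pixel votes for all (rho, theta) pairs of lines that could pass through it, and the accumulator cell with the most votes gives the dominant line.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pixel { int x; int y; };                          // hypothetical edge pixel
struct PolarLine { float rho; float theta; int votes; }; // hypothetical result type

// Returns the line x*cos(theta) + y*sin(theta) = rho that collects the most votes.
// rhoStep and thetaStep set the accumulator resolution.
PolarLine strongestHoughLine(const std::vector<Pixel>& edgePixels,
                             int width, int height,
                             float rhoStep, float thetaStep) {
    assert(width > 0 && height > 0 && rhoStep > 0.0f && thetaStep > 0.0f);
    const float pi = 3.14159265f;
    const float maxRho = std::sqrt(static_cast<float>(width * width + height * height));
    const int half = static_cast<int>(std::ceil(maxRho / rhoStep));  // bins per sign of rho
    const int rhoBins = 2 * half + 1;
    const int thetaBins = static_cast<int>(pi / thetaStep + 0.5f);   // theta in [0, pi)

    std::vector<int> acc(static_cast<std::size_t>(rhoBins) * thetaBins, 0);
    PolarLine best;
    best.rho = 0.0f;
    best.theta = 0.0f;
    best.votes = 0;

    for (std::size_t k = 0; k < edgePixels.size(); ++k) {
        for (int t = 0; t < thetaBins; ++t) {
            const float theta = t * thetaStep;
            const float rho = edgePixels[k].x * std::cos(theta) + edgePixels[k].y * std::sin(theta);
            const int r = static_cast<int>(std::lround(rho / rhoStep)) + half;
            if (r < 0 || r >= rhoBins) continue;
            const int v = ++acc[static_cast<std::size_t>(r) * thetaBins + t];
            if (v > best.votes) {
                best.rho = (r - half) * rhoStep;
                best.theta = theta;
                best.votes = v;
            }
        }
    }
    return best;
}
```

With coarse steps, several neighbouring accumulator cells can tie for a short line, so the reported angle is only accurate to within a few bins. This is one reason the choice of resolution matters for detection quality.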

Since our goal is to determine the quality of the image within a region of interest, we do not need to analyze all the edges identified within that region. Rather, we can select a few based on pre-determined criteria that maximize our ability to correctly determine the luminance gradients.

The following code snippet shows my implementation of the edge parameterization method using IPP:

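    // Orders points by x first, then by y, so that points along a line are traversed in sequence.
    bool CompareIppiPoint(const IppiPoint &p1, const IppiPoint &p2) {
        if (p1.x == p2.x) {
            return p1.y < p2.y;
        } else {
            return p1.x < p2.x;
        }
    }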

    void IPPGrayImage::HoughLine(IppPointPolar delta, int threshold, int maxLineCount, int* pLineCount, IppPointPolar pLines[], list<IppiPoint> pList[]) {
        IppStatus sts;
        IppiSize roiSize = {_width, _height};

        // Query and allocate the working buffer needed by the Hough transform.
        int bufSize;
        sts = ippiHoughLineGetSize_8u_C1R(roiSize, delta, maxLineCount, &bufSize);
        assert(sts == ippStsNoErr);
        Ipp8u *pBuf = ippsMalloc_8u(bufSize);
        assert(pBuf != NULL);

        // Convert the 32-bit float edge image to 8 bits.
        // ippiMalloc may pad each row, so stepSize (bytes per row) is used rather than _width.
        int stepSize;
        Ipp8u *imgBuf = ippiMalloc_8u_C1(_width, _height, &stepSize);
        assert(imgBuf != NULL);
        sts = ippiConvert_32f8u_C1R(_imgBuffer, _width * PIXEL_SIZE, imgBuf, stepSize, roiSize, ippRndNear);
        assert(sts == ippStsNoErr);

        // Detect up to maxLineCount lines; their (rho, theta) parameters are stored in pLines[].
        sts = ippiHoughLine_8u32f_C1R(imgBuf, stepSize, roiSize, delta, threshold, pLines, maxLineCount, pLineCount, pBuf);
        assert(sts == ippStsNoErr);

        // For every edge pixel, compute its distance to each detected line:
        // d = |x0 * cos a + y0 * sin a - p|
        // Note that threshold is reused here as the maximum distance in pixels.
        for (int x = 0; x < _width; x++) {
            for (int y = 0; y < _height; y++) {
                if (imgBuf[x + y * stepSize] > 0) {
                    for (int i = 0; i < *pLineCount; i++) {
                        float d = fabs((float) cos(pLines[i].theta) * (float) x + (float) sin(pLines[i].theta) * (float) y - fabs(pLines[i].rho));
                        if (d < threshold) {
                            IppiPoint p;
                            p.x = x;
                            p.y = y;
                            pList[i].push_back(p);
                        }
                    }
                }
            }
        }

        // Sort the points collected for each line so that they can be walked in order.
        for (int i = 0; i < *pLineCount; i++) {
            pList[i].sort(CompareIppiPoint);
        }

        ippsFree(pBuf);
        ippiFree(imgBuf);
    }

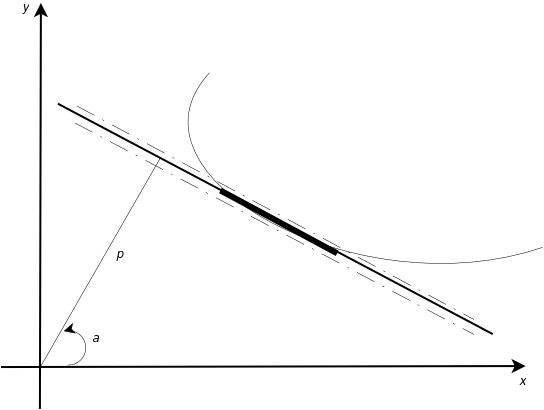

Note that the choice of delta (the rho and theta resolution of the Hough accumulator) is crucial for the line detection quality. In the code above, up to maxLineCount lines are detected and each line’s parameters are stored in pLines[]. The coordinates of the edge pixels whose distance to a line is less than threshold are collected into that line's list in pList[]. Because the Hough detection does not indicate the start and end points of a line, we need to iterate through the pixels in pList[] and find the sections of the edge that the detected lines pass through. The image above illustrates how the edge regions are chosen based on the detected line parameters. Image features within the region between the dotted lines are recorded based on their distance to the line, where \(\alpha\) and \(p\) are the line's theta and rho:

\[d=\vert x_0\cos\alpha+y_0\sin\alpha-p\vert\]
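The distance test can be isolated into a small standalone sketch. The names `distanceToPolarLine` and `isNearLine` are hypothetical and only serve to illustrate the formula; `maxDistance` plays the role that threshold plays in the selection step.

```cpp
#include <cassert>
#include <cmath>

// Perpendicular distance from the point (x0, y0) to the line
// x * cos(alpha) + y * sin(alpha) = p, given in polar (normal) form.
float distanceToPolarLine(float x0, float y0, float alpha, float p) {
    return std::fabs(x0 * std::cos(alpha) + y0 * std::sin(alpha) - p);
}

// Hypothetical helper: true when the point lies inside the band around the line.
bool isNearLine(float x0, float y0, float alpha, float p, float maxDistance) {
    assert(maxDistance >= 0.0f);
    return distanceToPolarLine(x0, y0, alpha, p) < maxDistance;
}
```

For example, with alpha = 0 and p = 3 the line is the vertical line x = 3, so the point (5, 7) lies at a distance of 2 from it.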

In practice, for each parameterized line I chose the longest section where the line approximates a section of the edge. Other line sections can be used as well, but the longest one tends to offer better classification results. The code is shown below, and the thickened section in the figure above illustrates the selected section.

    // Finds the longest run of consecutive points in which neighbouring points are
    // no more than threshold apart in both x and y, and copies that run into lpoints.
    // maxLen is in/out: on entry the capacity of lpoints, on return the number of points copied.
    void LineUtils::GetLongestConnectedLinePoints(IppiPoint points[], int len, int threshold, IppiPoint lpoints[], int &maxLen) {
        if (len <= 0 || maxLen <= 0) {
            maxLen = 0;
            return;
        }

        int runStart = 0, bestStart = 0, bestLength = 1;

        for (int i = 1; i <= len; i++) {
            // A run ends at the end of the input, or when the gap to the previous point exceeds threshold.
            bool runEnds = (i == len) || (abs(points[i].x - points[i - 1].x) > threshold) || (abs(points[i].y - points[i - 1].y) > threshold);
            if (runEnds) {
                if (i - runStart > bestLength) {
                    bestLength = i - runStart;
                    bestStart = runStart;
                }
                runStart = i;
            }
        }

        // Copy the longest run, truncated to the capacity of lpoints.
        if (bestLength > maxLen) {
            bestLength = maxLen;
        }
        for (int i = 0; i < bestLength; i++) {
            lpoints[i] = points[bestStart + i];
        }
        maxLen = bestLength;
    }

In my next post, I will continue with the gradient calculation and some methods to improve the detection accuracy.